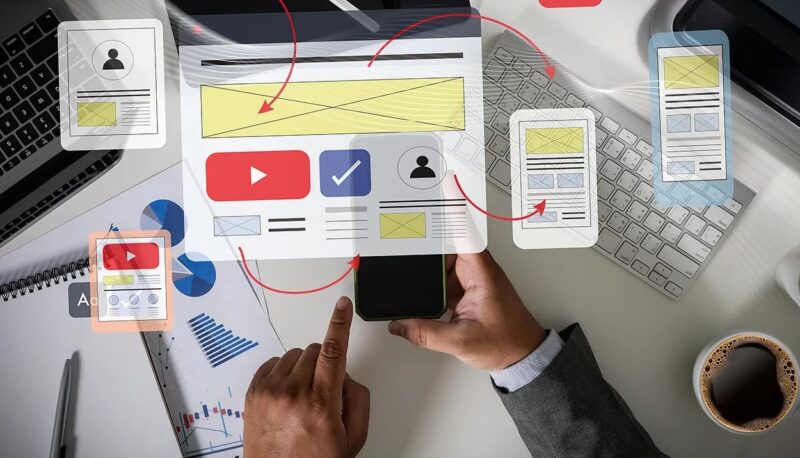

Creative testing is the backbone of predictable performance. Platforms change, audiences fatigue quickly, and what worked last month often underperforms today. Without a clear creative testing system, ad accounts drift into guesswork, random tweaks, and reactive decision-making.

A structured approach to testing images, copy angles, and landing pages removes emotion from optimization.

It creates a repeatable process where insights compound instead of resetting every time performance drops.

This article outlines a practical framework for creative testing that real teams can run weekly, without burning budgets or drowning in data.

The focus is not on hacks or one-off wins, but on building a system that scales learning and improves return on ad spend over time.

Choosing the Right Platforms and Traffic Sources

Not all traffic sources behave the same way, and creative performance varies significantly across platforms. A testing system must account for this reality instead of assuming universal results.

Some platforms reward bold visuals and fast hooks, while others favor educational depth and credibility. Matching creative formats to platform expectations improves signal quality during tests.

When planning creative tests, consider:

- Audience intent level on the platform

- Creative format constraints and best practices

- Speed of feedback and data accumulation

For advertisers exploring crypto, fintech, or performance-driven display traffic, ad networks like Bitmedia offer environments where creative testing can be particularly insightful due to niche targeting options and transparent performance metrics.

Testing creatives across specialized traffic sources often reveals messaging nuances that broader platforms fail to surface.

The key is consistency. Run the same creative logic across platforms while respecting native formats. This allows teams to compare insights without contaminating results through mismatched execution.

Setting Clear Testing Goals Before Launching Ads

Before launching any creative test, the goal must be defined. Testing without a clear objective leads to confusing results and false conclusions.

Every test should answer one focused question.

Examples of valid testing goals include:

- Does a product-first image outperform lifestyle imagery?

- Does urgency-based copy convert better than educational messaging?

- Does a simplified landing page increase conversion rate?

Goals should align with the funnel stage being optimized. Top-of-funnel tests often focus on click-through rate and engagement, while mid- and bottom-funnel tests prioritize conversion rate and cost per acquisition.
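The funnel metrics named above reduce to simple ratios. The sketch below is only an illustration: the `AdStats` structure and the sample numbers are hypothetical, and it assumes conversion rate is measured per click (some teams measure it per landing page session instead).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdStats:
    """Raw counts for one ad variant (hypothetical structure for illustration)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float


def click_through_rate(stats: AdStats) -> float:
    """Clicks divided by impressions; 0.0 when there are no impressions."""
    return stats.clicks / stats.impressions if stats.impressions else 0.0


def conversion_rate(stats: AdStats) -> float:
    """Conversions divided by clicks (an assumption); 0.0 when there are no clicks."""
    return stats.conversions / stats.clicks if stats.clicks else 0.0


def cost_per_acquisition(stats: AdStats) -> Optional[float]:
    """Spend divided by conversions; None when nothing has converted yet."""
    return stats.spend / stats.conversions if stats.conversions else None


# Made-up numbers, not real campaign data.
example = AdStats(impressions=10_000, clicks=200, conversions=10, spend=150.0)
print(click_through_rate(example), conversion_rate(example), cost_per_acquisition(example))
```

Returning `None` for cost per acquisition when there are no conversions avoids reporting a misleading zero cost for a variant that has not converted at all.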

It is also important to decide what success looks like before data comes in. This prevents moving goalposts when results challenge assumptions.

Clear testing goals create cleaner insights and make it easier to document learnings that inform future campaigns rather than staying locked in a single experiment.

Building a Creative Testing Framework That Scales

A scalable creative testing framework limits variables while maximizing learning. The goal is not to test everything at once, but to isolate elements systematically.

A simple framework follows this structure:

- One variable changes per test

- All other elements remain constant

- Tests run for a predefined duration or impression threshold

This approach ensures that performance differences can be attributed to the variable being tested, not random fluctuations.
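One way to enforce the one-variable rule before launch is to describe each ad as a set of named fields and compare the control against the variant. This is a minimal sketch; the field names (`image`, `headline`, `cta`) and their values are hypothetical placeholders, not a required schema.

```python
from typing import Dict, List


def changed_fields(control: Dict[str, str], variant: Dict[str, str]) -> List[str]:
    """Return the sorted names of fields whose values differ between two ads."""
    keys = set(control) | set(variant)
    return sorted(k for k in keys if control.get(k) != variant.get(k))


def is_single_variable_test(control: Dict[str, str], variant: Dict[str, str]) -> bool:
    """True only when exactly one field differs, so the result can be attributed to it."""
    return len(changed_fields(control, variant)) == 1


# Hypothetical ad definitions for illustration.
control = {"image": "product_closeup", "headline": "Save time on reporting", "cta": "Get Started"}
variant = {**control, "image": "lifestyle_context"}
muddled = {**control, "image": "lifestyle_context", "cta": "See Pricing"}

print(changed_fields(control, variant))           # only the image differs
print(is_single_variable_test(control, muddled))  # two fields differ, so this is not a clean test
```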

Creative testing should operate in cycles. Each cycle produces insights that inform the next set of hypotheses. Over time, these cycles build a creative playbook specific to your audience and offer.

Documentation is critical. Without structured notes, teams repeat the same tests unknowingly. A shared testing log turns experimentation into institutional knowledge instead of individual memory.
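A shared log can be as simple as one structured record per test. The sketch below assumes a hypothetical `CreativeTestRecord` with a handful of fields; the field names, the sample entry, and the JSON-lines storage format are illustrative choices, not a prescribed standard.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CreativeTestRecord:
    """One row in a shared testing log (hypothetical fields for illustration)."""
    test_id: str
    variable: str     # the single element that changed, e.g. "image"
    hypothesis: str
    result: str
    learning: str


def to_json_line(record: CreativeTestRecord) -> str:
    """Serialize a record as one JSON line, suitable for appending to a log file."""
    return json.dumps(asdict(record), sort_keys=True)


def prior_tests(log: List[CreativeTestRecord], variable: str) -> List[CreativeTestRecord]:
    """Find earlier tests on the same variable so a team does not repeat them unknowingly."""
    return [r for r in log if r.variable.lower() == variable.lower()]


# Made-up entry, not a real result.
log = [
    CreativeTestRecord(
        test_id="cycle-01-a",
        variable="image",
        hypothesis="Lifestyle context beats product close-up for cold traffic",
        result="variant won",
        learning="Use lifestyle imagery as the default for cold audiences",
    )
]

print(to_json_line(log[0]))
print(len(prior_tests(log, "Image")))
```

Checking `prior_tests` before writing a new hypothesis is a cheap guard against rerunning a question the team has already answered.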

Testing Images and Visual Angles Effectively

Images often carry the highest impact in paid ads, especially in crowded feeds. Testing visuals requires discipline because small differences can produce misleading results if not structured properly.

Effective image testing focuses on one dimension at a time, such as:

- Product close-ups versus lifestyle context

- Human faces versus product-only visuals

- Minimalist design versus information-dense layouts

Each image should support the same core message. Changing both the image and the message at once dilutes insight.

Did you know? Eye-tracking studies consistently show that users process images faster than text, often forming an emotional reaction before reading a single word. This makes image testing one of the fastest ways to improve ad performance without rewriting copy.

Once a winning visual direction emerges, variations can be created within that theme to fight creative fatigue while preserving the core elements that resonate.

Copy Angle Testing Without Guesswork

Copy testing is not about rewriting headlines endlessly. It is about testing different psychological angles that frame the same offer in distinct ways.

Common copy angles include:

- Problem-solution framing

- Social proof and credibility

- Scarcity and urgency

- Value comparison or cost savings

Each angle speaks to a different motivation. Testing them individually clarifies what drives action for your audience.

Copy tests should maintain consistent structure and length. This ensures results are driven by the angle, not readability differences. Even subtle wording changes can influence perception, so clarity and consistency matter.

Important note: A copy angle is not the same as copywriting style. Two ads can use identical tone and structure while appealing to entirely different motivations. Testing angles focuses on “why” someone should care, not “how” it is written.

Winning angles often translate across multiple creatives and landing pages, making them some of the most valuable insights in paid advertising.

Landing Page Testing as Part of the Creative System

Creative testing does not stop at the ad. Landing pages are a continuation of the message, and misalignment kills conversion rates.

Landing page tests should focus on clarity and friction reduction. Common testing variables include headline framing, social proof placement, and call-to-action structure.

Below is a simple example of how landing page variations can be tested:

| Element Tested | Version A | Version B |
| --- | --- | --- |
| Headline | Feature-focused | Outcome-focused |
| CTA Text | “Get Started” | “See Pricing” |
| Page Length | Long-form | Short-form |

After running the test, analyze results beyond conversion rate.

Time on page, scroll depth, and bounce rate help explain why one version outperformed the other. These insights feed back into ad creative, creating a closed feedback loop between ads and pages.

Budget Allocation and Test Duration Best Practices

Two of the biggest mistakes in creative testing are ending tests too early and underfunding them. Without enough data, results cannot be told apart from noise.

Set minimum thresholds for impressions or clicks before evaluating performance. Exact numbers vary by niche, so applying the same threshold to every variant in every test matters more than finding a perfect number.
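A threshold check can be paired with a basic significance test so that a gap between two variants is not mistaken for a win too early. The sketch below uses a two-proportion z-test, which is one common choice and not something this framework prescribes; the `MIN_CLICKS_PER_VARIANT` value is a hypothetical placeholder, and the sample counts are made up.

```python
import math
from typing import Tuple

# Hypothetical threshold; choose your own and keep it fixed across tests.
MIN_CLICKS_PER_VARIANT = 300


def ready_to_evaluate(clicks_a: int, clicks_b: int,
                      min_clicks: int = MIN_CLICKS_PER_VARIANT) -> bool:
    """True once both variants have reached the predefined click threshold."""
    return clicks_a >= min_clicks and clicks_b >= min_clicks


def two_proportion_z_test(conv_a: int, clicks_a: int,
                          conv_b: int, clicks_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rate, B minus A.

    Call ready_to_evaluate first: this function assumes both click counts are non-zero.
    """
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (conv_b / clicks_b - conv_a / clicks_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Made-up counts for illustration.
if ready_to_evaluate(1000, 1000):
    z, p = two_proportion_z_test(conv_a=50, clicks_a=1000, conv_b=80, clicks_b=1000)
    print(f"z={z:.2f}, p={p:.4f}")
```

A small p-value suggests the difference is unlikely to be random fluctuation, but it says nothing about whether the lift is large enough to matter commercially, so it works best alongside the success criteria defined before launch.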

Avoid spreading budgets too thin across too many variations. Fewer, well-funded tests produce clearer insights than dozens of underpowered ones.
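A quick calculation shows how many variations a budget can actually support. This is a rough sketch under stated assumptions: the estimated cost per click and the minimum clicks per variation are hypothetical inputs you would supply from your own account history.

```python
import math


def max_fundable_variants(budget: float, est_cpc: float, min_clicks: int) -> int:
    """How many variations can each reach min_clicks within the budget.

    est_cpc is an estimated cost per click (an assumption, not a guaranteed price).
    """
    cost_per_variant = est_cpc * min_clicks
    if cost_per_variant <= 0:
        raise ValueError("est_cpc and min_clicks must both be positive")
    return math.floor(budget / cost_per_variant)


# Made-up inputs: a 1,000 budget, 0.50 per click, 300 clicks needed per variation.
print(max_fundable_variants(budget=1000.0, est_cpc=0.5, min_clicks=300))
```

If the result is smaller than the number of variations planned, the options are to cut variations, raise the budget, or extend the test window rather than launch underpowered tests.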

Testing schedules should be predictable. Weekly or bi-weekly cycles create rhythm and accountability. Teams know when new creatives launch, when results are reviewed, and when decisions are made.

This structure reduces emotional reactions to daily fluctuations and keeps focus on trends rather than isolated data points.

Turning Test Results Into Actionable Insights

Testing without analysis is wasted spend. The real value lies in extracting insights that inform future decisions.

After each test cycle, ask:

- What changed user behavior?

- Which assumptions were validated or disproven?

- How can this insight be reused elsewhere?

Insights should be translated into guidelines, not just reports. For example, “Lifestyle images with real people outperform studio shots for cold traffic” becomes a rule that shapes future creative production.

Share findings across teams. Creative, media buying, and product teams all benefit from understanding what messaging resonates. This alignment strengthens overall marketing effectiveness beyond paid ads alone.

Creating a Sustainable Creative Testing Culture

The most successful advertisers treat creative testing as an ongoing system, not a campaign task. It becomes part of weekly operations rather than a reaction to performance drops.

A healthy testing culture values learning over ego. Losing tests are not failures; they are data points that narrow the path to winning ideas.

Over time, this mindset reduces stress and increases confidence. Decisions feel grounded, scaling feels safer, and performance becomes more predictable.

Creative testing, when done systematically, is not about chasing trends. It is about understanding your audience deeply and building a repeatable engine that adapts as platforms and behaviors change.

When that system is in place, paid advertising stops feeling chaotic and starts behaving like a controllable growth channel.